A topic that has been generating a lot of interest within the Citrix community is planning and designing a XenDesktop 7.1 solution on Microsoft Hyper-V 2012 R2. Citrix Consulting is releasing a new update to the Citrix Virtual Desktop Handbook, which includes design decisions for deploying XenDesktop 7.1 on Hyper-V 2012 R2 and System Center Virtual Machine Manager 2012 R2. In advance of the new release, I will be publishing a series of Hyper-V 2012 R2 blogs, each of which will focus on a different topic covered in the handbook. This week, I’m going to take a look at some of the new Hyper-V 2012 R2 networking features that you should consider during a XenDesktop design.

Several networking changes in Windows Server 2012 R2 have a direct impact on the design of XenDesktop 7.1 on Hyper-V 2012 R2:

- NIC teaming. Windows Server 2008 R2 does not support NIC teaming natively, so Hyper-V 2008 R2 hosts require third-party NIC management software to team adapters. This has changed in Windows Server 2012/2012 R2: with teaming built into the operating system, NIC teaming is easier to set up and apply to Hyper-V servers. I recommend configuring NIC teaming on all Hyper-V hosts used in XenDesktop solutions, particularly hosts that are clustered. It’s possible to create virtual NIC teams at the VM level too, but that adds complexity to the solution. For most XenDesktop deployments, creating the team at the physical layer is sufficient.

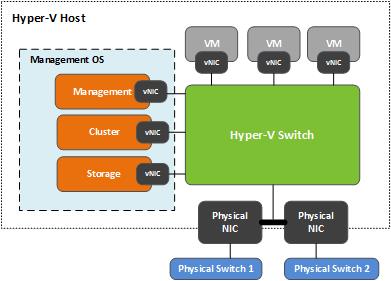

- Virtual NICs in the parent partition. In Hyper-V 2008 R2 you are limited to one virtual NIC in the parent partition. In Hyper-V 2012 R2, you can create multiple virtual NICs in the parent partition. This is very useful when you have a limited number of physical NICs available, for example, in blade servers. With XenDesktop on Hyper-V there are typically five networks to consider: management, cluster, live migration, VM network and storage. Hyper-V 2008 R2 requires physical NICs to isolate each of these networks. On Hyper-V 2012 R2, you can get by with a pair of teamed physical NICs and four virtual NICs in the parent partition; the VM network is carried by the Hyper-V virtual switch itself, so it does not need a parent-partition NIC. For example, consider a blade server that has two physical 10Gbps NICs. Create the NIC team first, then enable the Hyper-V role. The Hyper-V virtual switch will be associated with the NIC team. Virtual NICs can then be created (using PowerShell) for the management, cluster, live migration and storage (if using iSCSI) networks in the parent partition. Next, I recommend assigning a VLAN to each virtual NIC (also using PowerShell) to isolate the different types of traffic. For more information about the PowerShell commands, please see the Microsoft TechNet article – What’s New in Hyper-V Virtual Switch. Once the virtual NICs are created, the Hyper-V host will look something like the image below, with all virtual NICs routing through the Hyper-V virtual switch.

- SR-IOV. Hyper-V 2012/2012 R2 supports Single Root I/O Virtualization (SR-IOV) capable network adapters. This allows VMs to access the physical network adapter directly, which improves I/O performance. When SR-IOV is enabled, however, the traffic bypasses the Hyper-V virtual switch and therefore cannot take advantage of the redundancy provided by the NIC team associated with that switch. Because teaming SR-IOV-enabled NICs is not supported, I recommend enabling SR-IOV in XenDesktop deployments only on networks dedicated to live migration. You gain performance but lose redundancy, so use it with caution.
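
The converged layout from the parent-partition discussion can be sketched as a small Python helper that prints the matching PowerShell commands in the order the text recommends: team the physical NICs, enable the Hyper-V role, bind the virtual switch to the team, then add one parent-partition vNIC per host network, each on its own VLAN. The cmdlets (New-NetLbfoTeam, Install-WindowsFeature, New-VMSwitch, Add-VMNetworkAdapter, Set-VMNetworkAdapterVlan) are the Windows Server 2012 R2 ones. The team, switch and adapter names and the VLAN IDs are hypothetical placeholders. Treat the output as a starting point to check against the TechNet article, not as a tested deployment script.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ParentVnic:
    """A virtual NIC in the Hyper-V parent partition (management OS)."""
    name: str
    vlan_id: int


# Hypothetical names and VLAN IDs, for illustration only.
TEAM_NAME = "HostTeam"
SWITCH_NAME = "ConvergedSwitch"
PHYSICAL_NICS = ["NIC1", "NIC2"]  # e.g. the two 10Gbps blade NICs
PARENT_VNICS = [
    ParentVnic("Management", 10),
    ParentVnic("Cluster", 20),
    ParentVnic("LiveMigration", 30),
    ParentVnic("Storage", 40),  # only needed if storage runs over iSCSI
]


def build_commands(team, switch, nics, vnics):
    """Return PowerShell command lines in the order: team, role, switch, vNICs."""
    vlan_ids = [v.vlan_id for v in vnics]
    if len(set(vlan_ids)) != len(vlan_ids):
        raise ValueError("each parent-partition vNIC needs its own VLAN")
    members = ",".join(f'"{n}"' for n in nics)
    cmds = [
        # 1. Team the physical NICs before enabling Hyper-V.
        f'New-NetLbfoTeam -Name "{team}" -TeamMembers {members}',
        # 2. Enable the Hyper-V role; the host restarts here, so the
        #    remaining commands are run after the reboot.
        "Install-WindowsFeature -Name Hyper-V -IncludeManagementTools -Restart",
        # 3. Bind the virtual switch to the team interface. No default host
        #    vNIC is created; host traffic uses the explicit vNICs below.
        f'New-VMSwitch -Name "{switch}" -NetAdapterName "{team}" '
        "-AllowManagementOS $false",
    ]
    for v in vnics:
        # 4. One parent-partition vNIC per host network ...
        cmds.append(
            f'Add-VMNetworkAdapter -ManagementOS -Name "{v.name}" '
            f'-SwitchName "{switch}"'
        )
        # ... isolated on its own access-mode VLAN.
        cmds.append(
            f"Set-VMNetworkAdapterVlan -ManagementOS "
            f'-VMNetworkAdapterName "{v.name}" -Access -VlanId {v.vlan_id}'
        )
    return cmds


if __name__ == "__main__":
    for line in build_commands(TEAM_NAME, SWITCH_NAME, PHYSICAL_NICS, PARENT_VNICS):
        print(line)
```

The VM network needs no entry here: the desktop VMs attach to the same switch, and their VLAN is set on each VM's own adapter rather than in the parent partition.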

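The SR-IOV guidance boils down to a rule that can be checked against a host's network plan. This minimal sketch uses hypothetical role names. It flags any network that has SR-IOV enabled but is not a dedicated live migration network, because that traffic bypasses the team-backed virtual switch and loses NIC team redundancy.

```python
# Hypothetical role names; the rule itself follows the SR-IOV guidance above.
SRIOV_OK_ROLES = {"live_migration"}


def sriov_warnings(plan):
    """plan maps a network role to True if SR-IOV is enabled on it."""
    warnings = []
    for role, sriov_enabled in sorted(plan.items()):
        if sriov_enabled and role not in SRIOV_OK_ROLES:
            warnings.append(
                f"{role}: SR-IOV traffic bypasses the virtual switch, "
                "so this network loses NIC team redundancy"
            )
    return warnings


if __name__ == "__main__":
    plan = {"management": False, "vm_network": True, "live_migration": True}
    for w in sriov_warnings(plan):
        print(w)
```
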
Some key XenDesktop network design decisions:

- Which type of physical network adapters to use? To improve Hyper-V 2012 R2 performance, consider using physical network adapters with the following properties:

  - 1Gbps or greater (10Gbps is preferred for larger deployments)

  - Offload hardware support – Reduces the CPU cost of network I/O. Hyper-V supports Large Send Offload and TCP checksum offload.

  - Receive Side Scaling (RSS) support – Improves the performance of multiprocessor Hyper-V hosts by allowing network receive processing to be distributed across CPUs.

  - Dynamic Virtual Machine Queue (dVMQ) support – Also improves the performance of multiprocessor Hyper-V hosts, by distributing the processing of traffic destined for specific VM queues across CPUs.

- How many physical networks to plan for? For most deployments there will be four networks: management, cluster, live migration and VM network (assuming that storage traffic uses an HBA connection). A pair of teamed 10Gbps network adapters per Hyper-V host should be sufficient.

- Which type of virtual network adapters to use? Hyper-V 2012 R2 supports three types of virtual adapters:

  - Standard. A high-performance synthetic virtual adapter, used by default when creating VMs in Hyper-V. Select this adapter when creating VMs with Machine Creation Services.

  - Legacy. An emulated multiport DEC 21140 10/100 Mbps adapter. It does not perform as well as the standard adapter, but it is required when creating VMs with Provisioning Services, because Provisioning Services uses PXE to boot the desktops. A new feature in Provisioning Services 7 lets VMs switch over from the legacy adapter to the standard adapter after the VM boots. For the switchover to occur, the standard adapter must be on the same subnet as the legacy adapter. The drawback of this configuration is that each VM needs two NICs, which doubles the number of DHCP-leased IP addresses required. To keep the design and management simple, I recommend using only the legacy NIC with Provisioning Services. Note: Hyper-V 2012 R2 supports Generation 2 virtual machines, which can PXE boot using the standard adapter; however, XenDesktop 7 does not support Hyper-V Generation 2 VMs.

  - Fibre Channel. A special-purpose adapter for VMs that require direct access to virtual SANs. It is not supported on virtual machines running a Windows desktop operating system, so it will rarely, if ever, be used in XenDesktop 7 deployments.

- Should I use separate VLANs for the virtual desktops? For security, keeping the virtual desktops on a VLAN separate from the infrastructure network is considered good practice.

- Which type of virtual networks to configure? Hyper-V 2012/2012 R2 allows you to create virtual networks that are external, internal or private. External allows VMs to communicate with anything on the physical network, and is the only virtual network type needed for most XenDesktop deployments. Internal allows VMs on the same host to communicate with each other and with the Hyper-V host. Private allows VMs on the same host to communicate only with each other. Neither internal nor private networks are practical for most XenDesktop deployments.
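
The cost of the Provisioning Services dual-NIC switchover is easy to put in numbers. This sketch assumes one DHCP lease per NIC and a hypothetical pool of 500 desktops. It compares the lease count for legacy-only and dual-NIC VMs and computes the smallest IPv4 subnet that could hold those leases. It ignores the gateway and any other reserved or infrastructure addresses, which would have to be added on top.

```python
def dhcp_leases_needed(desktops, dual_nic):
    """One lease per NIC: PVS dual-NIC VMs put both NICs on the same subnet."""
    nics_per_vm = 2 if dual_nic else 1
    return desktops * nics_per_vm


def smallest_prefix(hosts):
    """Longest IPv4 prefix whose usable hosts (2**(32 - p) - 2) cover `hosts`."""
    for prefix in range(30, 0, -1):
        if 2 ** (32 - prefix) - 2 >= hosts:
            return prefix
    raise ValueError("too many hosts for a single IPv4 subnet")


if __name__ == "__main__":
    desktops = 500  # hypothetical pool size
    for dual in (False, True):
        leases = dhcp_leases_needed(desktops, dual)
        print(f"dual_nic={dual}: {leases} leases, at least a /{smallest_prefix(leases)}")
    # dual_nic=False: 500 leases, at least a /23
    # dual_nic=True: 1000 leases, at least a /22
```

Doubling the leases pushes the same desktop pool into a subnet twice the size. This is one concrete reason behind the recommendation to use only the legacy NIC.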

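The three virtual network types can be compared as a small reachability table. In this sketch, the endpoint names are hypothetical labels. Each type maps to the endpoints that a VM attached to it can reach, following the descriptions above. A desktop VM typically needs to reach XenDesktop infrastructure, such as the Delivery Controllers, over the physical network. Asking which types satisfy that requirement leaves only external.

```python
from enum import Enum


class SwitchType(Enum):
    EXTERNAL = "external"
    INTERNAL = "internal"
    PRIVATE = "private"


# Endpoints a VM on each virtual network type can talk to.
REACHABLE = {
    # Assumes the host shares the switch or is reachable on the physical network.
    SwitchType.EXTERNAL: {"vm_same_host", "hyperv_host", "physical_network"},
    SwitchType.INTERNAL: {"vm_same_host", "hyperv_host"},
    SwitchType.PRIVATE: {"vm_same_host"},
}


def can_reach(switch_type, endpoint):
    return endpoint in REACHABLE[switch_type]


def types_satisfying(required):
    """Virtual network types whose reachable set covers every required endpoint."""
    return [t for t in SwitchType if set(required) <= REACHABLE[t]]


if __name__ == "__main__":
    # A desktop VM must reach infrastructure on the physical network.
    print([t.value for t in types_satisfying({"physical_network"})])
    # ['external']
```

</imports>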
Next week we will take a look at some new storage features in Hyper-V 2012 R2 and storage design considerations for XenDesktop 7.1.

Ed Duncan – Senior Consultant

Worldwide Consulting

Desktop & Apps Team

Virtual Desktop Handbook

Project Accelerator
